Is MCP for humans or agents?

Are we all thinking about this the wrong way?

I recently went to a meetup in New York on the topic of Model Context Protocol (MCP) and how companies are using it in productive ways (special thanks to Alex Rattray of Stainless for being kind enough to host it). On the illustrious panel were engineers from Cloudflare, Stripe, Stainless, and others. These are established players who are actively experimenting with how to make MCP a practical reality.

What I came to realize halfway through was that it’s not the companies or their AI agents that are using MCP, but the humans at those companies. They are trying to make MCP useful internally for their human teams and externally for their human customers.

TL;DR: humans use MCP, not agents. For now (06/27/2025).

Where and how are humans using MCP?

Obviously, MCP, like any other machine protocol, is designed for computer/agent use. Humans are not designed to read JSON-RPC. To bridge the protocol <> human divide, engineers at these companies are connecting MCP servers to their agentic IDEs (like Cursor or Windsurf) and AI apps like Claude. They then wrap their internal APIs in MCP servers so that each API becomes available as a set of tools.
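To make the "humans are not designed to read JSON-RPC" point concrete, here is a rough sketch of the kind of message an IDE sends on your behalf when it invokes a tool. The `tools/call` method and the `name`/`arguments` parameters come from the MCP spec; the tool name `list_products` and its `limit` argument are made up for illustration.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request that asks an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# "list_products" is a hypothetical tool name, not a real API.
request = build_tool_call(1, "list_products", {"limit": 5})
print(json.dumps(request, indent=2))
```

The chat window in the IDE is what hides this envelope from you: you type a question, and the client decides which tool to call and builds the request.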

Now an engineer can pop open their IDE copilot and “chat” with an internal API they use to practically test how to interface with it before they build anything.

For example, you can explore how to use the Products API or inspect the schema of a DB before writing any SQL.

If you can explore and use an API this way, Cursor can draw on the same access to generate better code that interfaces with it. Makes total sense. This is the productivity ladder for engineers within these companies.

So what does this mean for the rest of us? Well, if you’re an engineer inside a company that hasn’t yet experimented with “AI”, writing an MCP server for an API that you interface with could be a great way to speed yourself up and put AI to practical use. And the best part is that when you decide to build an agent to do things for you, it can use the same rails.
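For a feel of what "writing an MCP server for an API" involves, here is a deliberately simplified, in-process sketch of the tool-handling core. It only dispatches `tools/list` and `tools/call` messages; it skips the initialization handshake and the transport layer, so it is not a conformant server (in practice you would likely reach for one of the official SDKs). The Products API, its data, and the `list_products` tool are all hypothetical stand-ins.

```python
import json

# Hypothetical stand-in for data returned by an internal Products API.
PRODUCTS = [{"id": 1, "name": "Widget"}, {"id": 2, "name": "Gadget"}]


def list_products(limit=10):
    """Pretend API call: return up to `limit` products."""
    return PRODUCTS[:limit]


# Registry of tools: a description and input schema for the client,
# plus the Python function that does the work.
TOOLS = {
    "list_products": {
        "description": "List products from the (hypothetical) Products API.",
        "inputSchema": {
            "type": "object",
            "properties": {"limit": {"type": "integer"}},
        },
        "handler": list_products,
    }
}


def error(request_id, code, message):
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def handle(message):
    """Dispatch one JSON-RPC request dict and return the response dict."""
    request_id = message.get("id")
    method = message.get("method")
    params = message.get("params", {})

    if method == "tools/list":
        result = {"tools": [
            {"name": name,
             "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return error(request_id, -32602, "Unknown tool")
        output = tool["handler"](**params.get("arguments", {}))
        # Tool results go back as content items; here, JSON-encoded text.
        result = {"content": [{"type": "text", "text": json.dumps(output)}],
                  "isError": False}
    else:
        return error(request_id, -32601, "Method not found")

    return {"jsonrpc": "2.0", "id": request_id, "result": result}


response = handle({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "list_products", "arguments": {"limit": 1}},
})
print(json.dumps(response, indent=2))
```

The point of the registry is that the same tool definitions serve both audiences: you, poking at the API through your IDE today, and an agent calling it later.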

So when does AI use MCP?

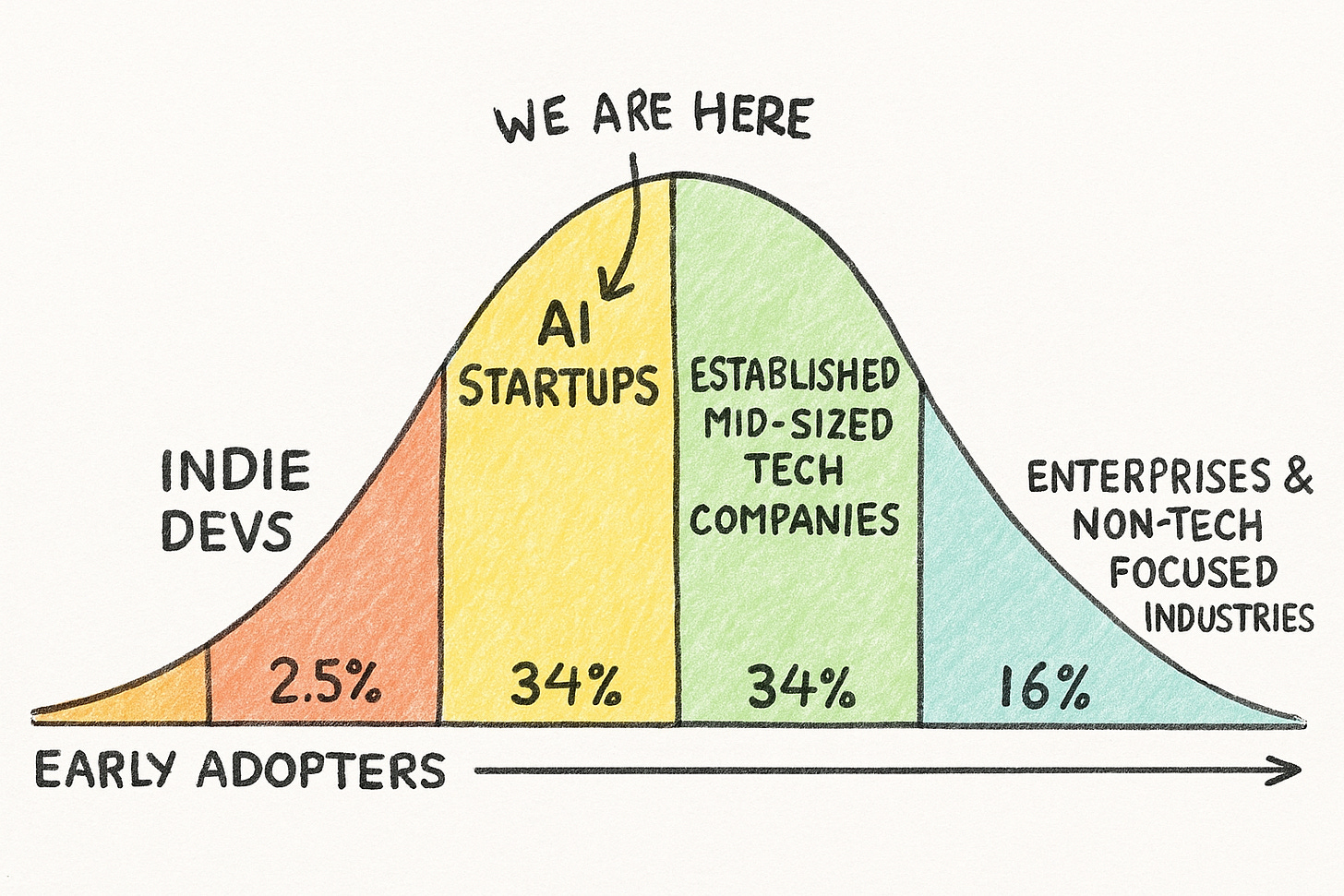

Putting aside all the hype of MCPs and agents, we should consider where we are in reality. The best way to figure this out (in my humble opinion) is to consider where we are in the adoption curve (in terms of which companies/users are using it). Thanks to ChatGPT I could draw this out in a few seconds:

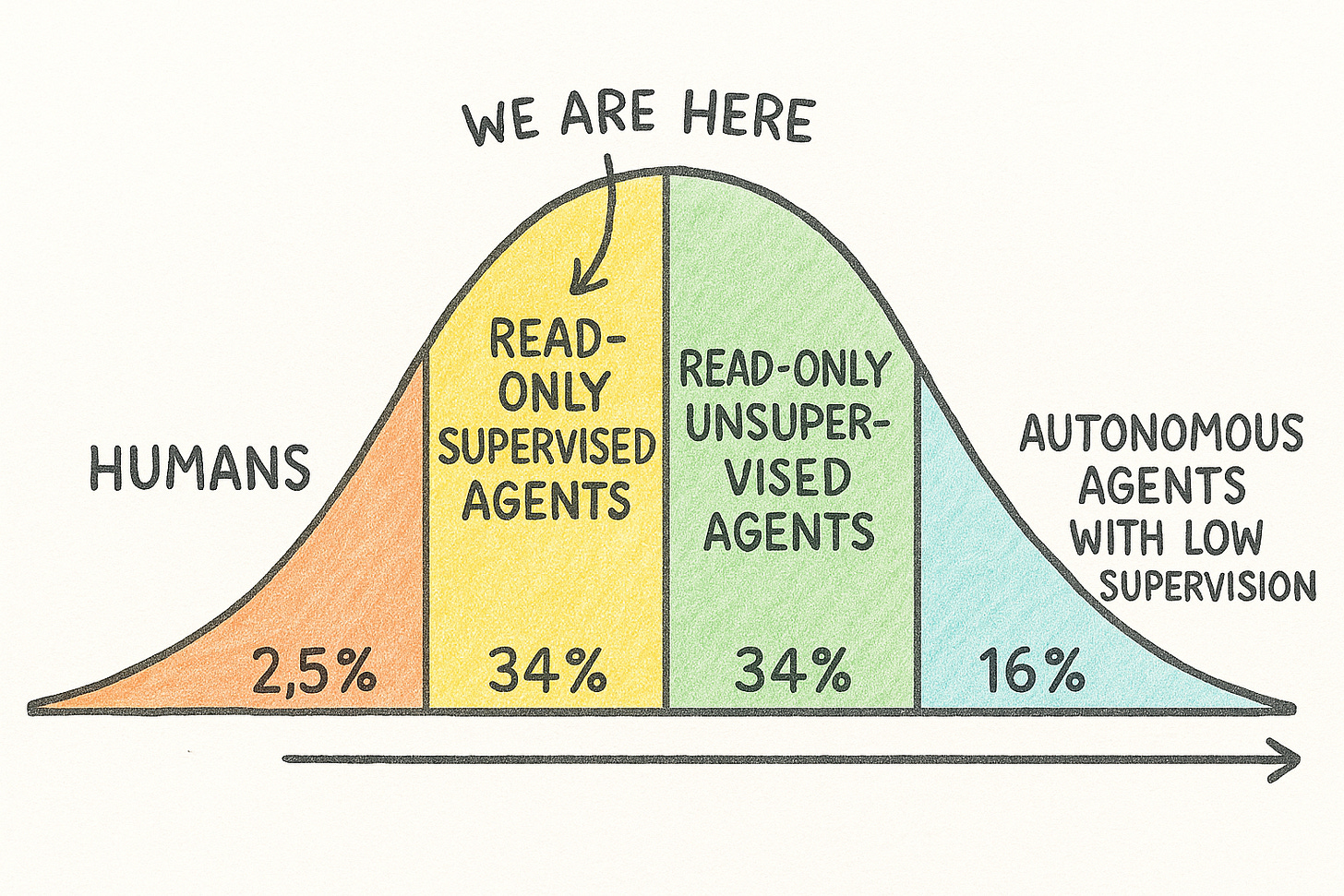

The dream of AI is getting to a world of unsupervised agents doing productive work for us in the background, and I do believe MCP will be a large part of facilitating this, but we aren’t there yet. I’m going to propose another adoption curve. This one shows how MCP is being used and where it can go.

Humans will need to be front-and-center to build the tools to get us all the way to the far end of the adoption curve. We need better authorization for MCPs, guardrails for tool-use, better tool-selection to reduce hallucinations, and more. The dream of unsupervised agents is attainable but it will require some practical solutions first.

I’m a human. How do I get started?

For the absolute beginners

Skim the spec: https://modelcontextprotocol.io/introduction

Try out an MCP in your agentic IDE. I’d recommend the following ones to get started:

Proxy the MCP transport to these servers using mcp-remote and add it to your IDE’s mcp.json configuration.

Open copilot chat and start asking it to use tools!
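The mcp-remote step above might look roughly like this. The sketch generates an mcp.json entry that launches mcp-remote through npx and points it at a remote server. The `mcpServers` layout is the shape commonly used by Cursor and similar clients, but check your IDE's documentation; the server name `example-server` and the URL are placeholders, not real endpoints.

```python
import json


def mcp_remote_entry(server_url):
    """Return a config entry that proxies a remote MCP server via mcp-remote."""
    return {"command": "npx", "args": ["mcp-remote", server_url]}


# Placeholder name and URL: substitute the server you actually want to use.
config = {
    "mcpServers": {
        "example-server": mcp_remote_entry("https://example.com/mcp"),
    }
}

# Paste the printed JSON into your IDE's mcp.json.
print(json.dumps(config, indent=2))
```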

If you want to try out MCPs immediately without setup

Join the free toolprint.ai sandbox, which exposes 71 tools across 8 MCPs, and start using it in Cursor. Start crafting “toolprints” (workflows that use these MCP tools across servers) in 2025’s newest programming language: English.

(as one of toolprint’s authors, I’m not at all suggesting that it may be the easiest option here — that would be atrocious!)

I’ll leave you with an idea that Andrej Karpathy proposed in his latest talk: LLMs are like “people spirits”. We have designed them to mimic human behavior. If we can use MCPs today to make ourselves more productive, it is inevitable that the “people spirits” will use them similarly to usher in a world of productivity we could only dream of.